How the Best Teams Think About AI Agent “Mistakes”: A More Effective Framework

As AI agents become more capable, they’re also becoming more embedded in real workflows. That means more enterprise teams are interacting with them, and even great agents don’t behave perfectly from day one.

Sometimes responses feel slightly off, a step is missing, or a rule is misapplied.

When this happens, the instinct is often to say:

“It didn’t work.”

or

“Here’s the right answer.”

The best enterprise teams adopting AI understand that building an agent is more like building a great product.

They don’t panic when the agent makes a mistake.

They treat it the way product teams treat bugs, UX issues, or spec gaps: as actionable signals that guide improvement.

But not all errors are the same, and the way you handle them directly affects how quickly and effectively your agent improves.

Teams that treat every issue identically end up overwhelmed, frustrated, and stuck in slow iteration cycles. Teams that recognize the type of error they’re seeing make faster progress with far less effort.

Here’s the framework they use.

Why AI Agents Need More Than “It Didn’t Work”

In human workflows — code reviews, Google Docs, Slack threads — a single comment box is often enough. You point out what’s wrong, someone fixes it, and everyone moves on.

AI agents are built differently.

Early in an AI deployment, it’s normal for teams to feel flooded with “mistakes”:

- “Why did it say that?”

- “Why did it ask that?”

- “Why didn’t it follow this rule?”

- “Why is the tone off?”

When everything is lumped together, the team ends up frustrated, and building with AI feels more chaotic than it actually is.

In practice, AI agent mistakes fall into three predictable categories, each with a different cause and a different fix. A smarter approach starts with identifying which category the issue falls into.

The 3 Types of AI Agent “Mistakes”

Understanding the difference helps determine the path forward in a manageable way.

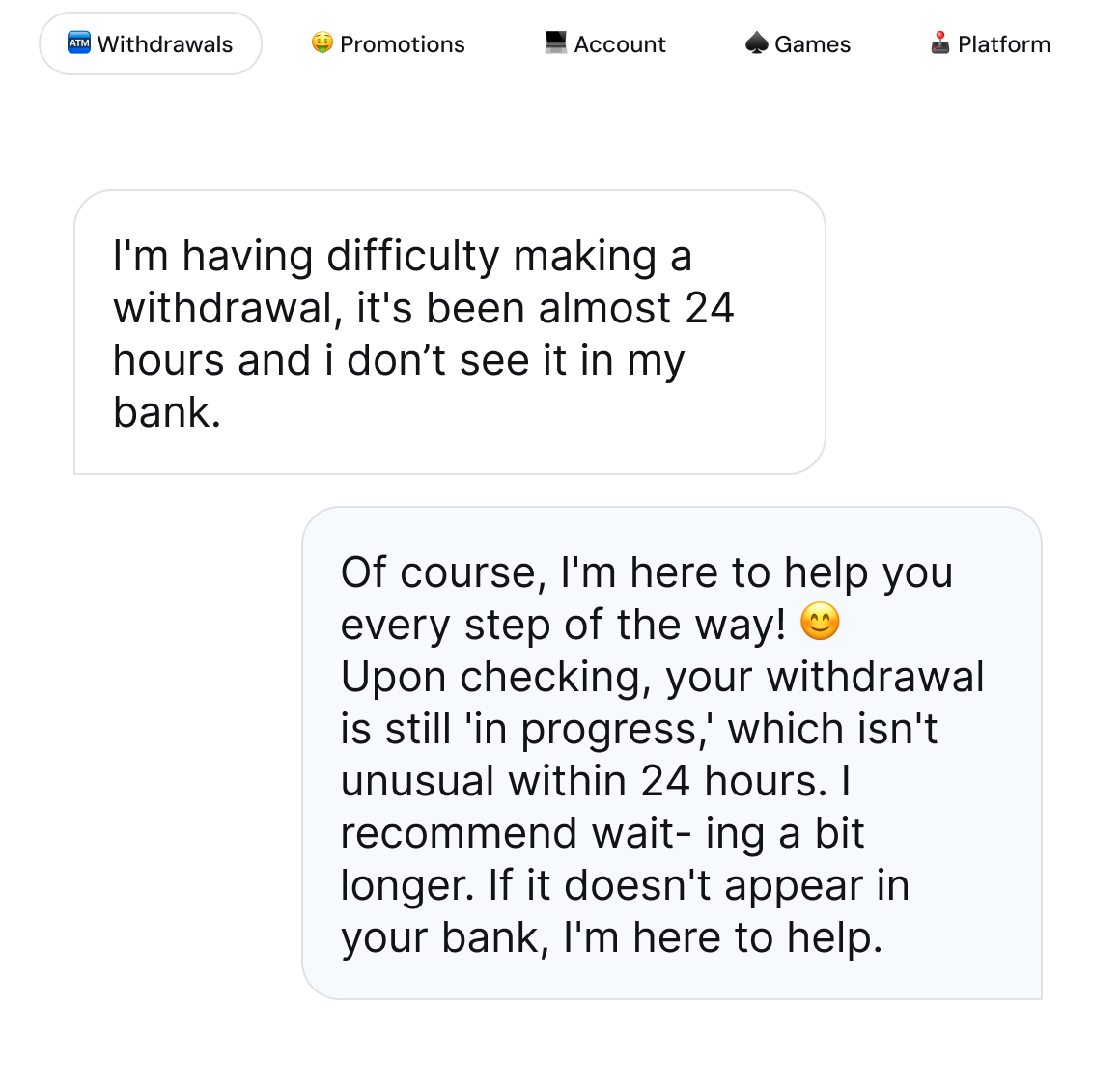

1. Tone & Phrasing Issues

“The information was right — the delivery was off.”

This is the most straightforward category.

The agent:

- Understands the user

- Applies the right logic

- Reaches the correct result

…but expresses itself in a way that’s slightly awkward, overly formal, too casual, or simply not aligned with your brand.

Why it happens

Models optimize for clarity and safety, not brand nuance.

Every brand has different expectations for voice, so minor mismatches appear naturally.

How to think about it

Treat this exactly like coaching a human agent on tone, not substance. Tone fixes improve consistency and brand alignment across all conversations without requiring deeper system changes.

Typical resolution

Provide examples of preferred phrasing or adjust style guidance.

These refinements resolve quickly and improve consistency.

Example:

If the agent says,

“We cannot grant your bonus.”

and you’d rather it say,

“At this time you don’t qualify for a bonus,” just provide that preferred language and describe the tone you want. Test different prompts until you reach the desired feel.
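One way to capture preferred phrasing is as before/after pairs that get rendered into the agent’s style guidance. The sketch below is only an illustration: `PhrasingExample` and `build_style_guidance` are hypothetical names, the tone description is an assumed example, and the output is plain text you would adapt to whatever prompt or configuration format your agent platform uses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PhrasingExample:
    """A before/after pair showing the preferred wording (hypothetical structure)."""
    avoid: str
    prefer: str


def build_style_guidance(tone: str, examples: list[PhrasingExample]) -> str:
    """Render a tone description and phrasing pairs into plain-text guidance."""
    lines = [f"Tone: {tone}", "Preferred phrasing:"]
    for ex in examples:
        lines.append(f'- Instead of "{ex.avoid}", say "{ex.prefer}"')
    return "\n".join(lines)


guidance = build_style_guidance(
    tone="warm and direct",  # assumed tone description, for illustration only
    examples=[
        PhrasingExample(
            avoid="We cannot grant your bonus.",
            prefer="At this time you don't qualify for a bonus.",
        )
    ],
)
print(guidance)
```

Keeping the pairs in one place makes it easier to test different wordings and to see which examples the agent is currently working from.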

2. Behavioral & Logic Mistakes (Instruction Following)

“The agent got to the right outcome, but the steps weren’t ideal.”

Here, the agent’s understanding is correct, but its process is slightly off.

Examples:

- Asking too many questions

- Skipping a step your team considers essential

- Confirming something too early

- Taking a longer route to solve a simple problem

Why it happens:

If your preferred process isn’t explicit, the agent generates a reasonable sequence on its own, which may differ from your internal playbook. Often, what seems like a well-written procedure is missing clear instructions for a specific nuance, and that can result in behavior that looks like a “mistake”.

How to think about it

This is the AI equivalent of a new employee who understands the job but is still learning how your organization prefers to handle certain requests.

Example improvements:

“Before continuing to any further steps, make sure that the manager is online, then continue to step 2. First do A → then check B → only then confirm C.”
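An explicit ordering like this can also be checked against conversations you review. The following is a minimal sketch, assuming each conversation can be reduced to a list of step labels; the labels (`check_manager_online`, `do_A`, and so on) and the function name are hypothetical, chosen to mirror the instruction above.

```python
from typing import Optional


def first_out_of_order_step(required_order: list[str], observed: list[str]) -> Optional[str]:
    """Return the first required step that did not occur after all the
    steps that should precede it, or None if the order was respected."""
    position = 0
    for step in required_order:
        try:
            # Look for this step only after the previous required step.
            position = observed.index(step, position) + 1
        except ValueError:
            return step
    return None


# Hypothetical step labels mirroring the example instruction.
required = ["check_manager_online", "do_A", "check_B", "confirm_C"]

followed = ["check_manager_online", "do_A", "check_B", "confirm_C"]
confirmed_early = ["check_manager_online", "do_A", "confirm_C", "check_B"]

print(first_out_of_order_step(required, followed))
print(first_out_of_order_step(required, confirmed_early))
```

The first call reports no problem. The second flags `confirm_C`, because the confirmation never happened after `check_B`, which points at the exact instruction that needs to be more explicit.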

Why this matters:

Without this detail, teams end up playing “whack-a-mole,” fixing one conversation only to see a different unaccounted-for outcome in another. Understanding where the instructions can be more explicit helps teams iterate on their agents without feeling overwhelmed.

3. Capability Gaps

“The agent didn’t make a mistake — it simply can’t do that yet.”

Sometimes the issue isn’t behavior or tone. The agent may simply not have:

- The information required

- Access to a needed data source

- The deterministic API necessary

In these cases, the outcome feels wrong, but the underlying problem is that the capability itself is missing.

How to give effective feedback:

Focus on the gap, not the failed interaction.

Example observation:

“The agent didn’t give the available bonus to the player”

Example gap:

“The agent doesn’t have access to available bonus (X) data. To support this workflow, it needs Y capability.”
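If your team logs feedback in a structured form, the same observation-versus-gap split can be made explicit. This is a sketch under assumptions: `CapabilityGapReport` and its field names are hypothetical, and X and Y remain the placeholders from the example above.

```python
from dataclasses import dataclass


@dataclass
class CapabilityGapReport:
    """Feedback that names the missing capability, not just the failed chat."""
    observation: str        # what reviewers saw in the conversation
    missing: str            # the information, data source, or API the agent lacks
    needed_capability: str  # what would have to be added to support the workflow

    def summary(self) -> str:
        return (
            f"Observed: {self.observation} | "
            f"Gap: {self.missing} | "
            f"Needs: {self.needed_capability}"
        )


report = CapabilityGapReport(
    observation="The agent didn't give the available bonus to the player",
    missing="access to available bonus (X) data",
    needed_capability="Y capability",
)
print(report.summary())
```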

How to Avoid “Mistake” Fatigue

When teams first adopt AI systems, the volume of “things to fix” or “things that are broken” can feel never-ending. But not all issues are equally important, not all issues are handled the same way, and not all issues deserve the same level of effort.

Here’s how mature AI teams stay productive:

Why This Model Works So Well

Because it reflects the way real work happens.

Just like with humans:

- Tone issues → quick coaching

- Journey issues → a clearer playbook

- Access issues → a better access policy

Once you view mistakes through this lens, they stop feeling like warning signs and start feeling like natural, expected signals that help you refine the system.

Teams who adopt this approach report:

- Less overwhelm during builds

- Faster improvement cycles

- Clearer communication between CX, product, and operations

- Higher confidence in scaling the agent to more use cases

A Quick Habit That Reduces Noise and “Mistake” Fatigue

Next time you review a conversation and something feels “off,” pause and ask:

“Is this Tone, Flow, or System Level?”

You’ll be surprised how quickly everything starts to make sense.
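For teams that tag reviewed conversations, the question can be turned into a small triage helper. The sketch below is one possible reading, not a definitive rule: the names are hypothetical, and the order of the checks (capability first, then process, then tone) is an assumption. It also assumes the reviewer has already decided that something felt off.

```python
from enum import Enum


class MistakeType(Enum):
    TONE = "Tone"
    FLOW = "Flow"
    SYSTEM = "System Level"


# Typical follow-up for each category, in the article's own terms.
NEXT_STEP = {
    MistakeType.TONE: "quick coaching",
    MistakeType.FLOW: "a clearer playbook",
    MistakeType.SYSTEM: "a better access policy",
}


def triage(had_needed_capability: bool, followed_preferred_steps: bool) -> MistakeType:
    """Classify an 'off' conversation using two reviewer judgments."""
    if not had_needed_capability:
        return MistakeType.SYSTEM  # the agent could not have done this yet
    if not followed_preferred_steps:
        return MistakeType.FLOW    # right understanding, wrong process
    return MistakeType.TONE        # right logic and result, delivery was off


category = triage(had_needed_capability=True, followed_preferred_steps=False)
print(category.value, "->", NEXT_STEP[category])
```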

And more importantly, you’ll start to see your agent the way high-performing teams do: as a system that learns, adapts, and improves through iteration and clear guidance, not as something that must be perfect from day one.